Understanding Kubernetes Container Runtime: CRI, Containerd and Runc Explained

Grasping the world of container orchestration infrastructure in Kubernetes is hard, given the number of APIs and tools at play. This article aims to cut through that complexity: we start with the high-level architecture and then dig into the specifics of each component, resolving ambiguities and shedding light on the critical aspects of container orchestration.

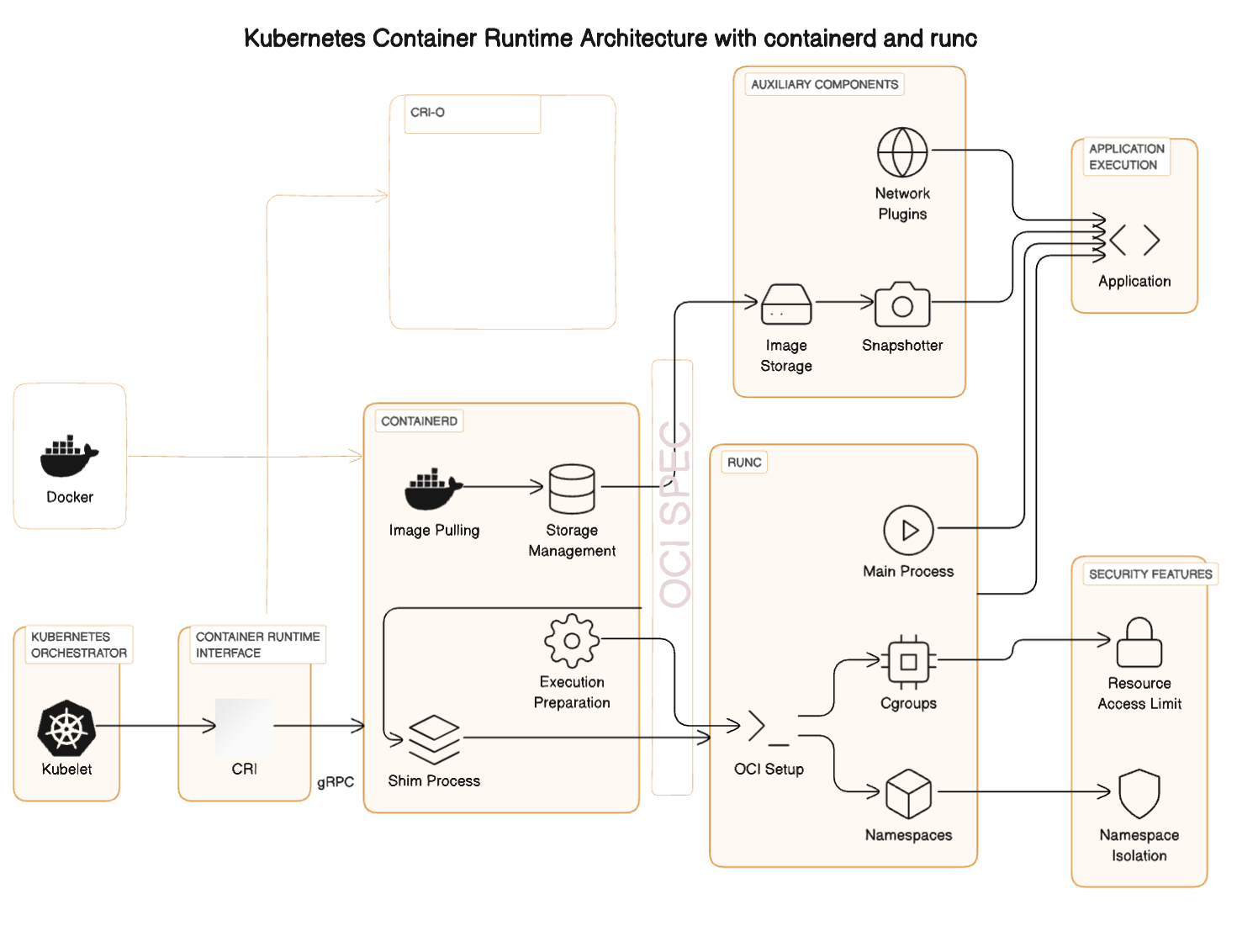

The diagram above illustrates the high-level architecture, showing how the various layers collaborate to ultimately execute an application. It also represents a continuous lifecycle management process: when to start or stop a container, when to apply updates, and how to isolate processes. Use it as a guide to the interplay and management strategies within container orchestration.

Heads up ❕ Navigating the maze of container orchestration is like trying to solve a Rubik's Cube. While I aim to shed some light on the subject, the complexity is high, and a few inaccuracies may well have slipped in. My goal is to arm you with a decent understanding; uncovering the absolute truth is another matter.

- Kubernetes Orchestrator: Kubernetes, via the kubelet on each node, decides to start a new container based on the PodSpecs of the pods assigned to that node.

- Container Runtime Interface (CRI): a Kubernetes API. The kubelet communicates with `containerd` through the CRI, instructing it to start a new container. The CRI is a critical component of Kubernetes, designed to abstract the container runtime from the kubelet so that Kubernetes can use various container runtimes without integrating with each one directly. This interface is crucial for keeping Kubernetes extensible and flexible.

- Runtimes: There are several runtimes to choose from:

  - containerd: Receives the command and prepares the container's environment. This includes pulling the necessary image if it's not already available locally, setting up storage, and other preparatory tasks. containerd is a Cloud Native Computing Foundation (CNCF) project backed by several industry leaders, including Docker, Google, and IBM.

  - CRI-O: Fully compatible with the Kubernetes Container Runtime Interface (CRI) standard, so it integrates seamlessly with Kubernetes and can run containerized applications within a Kubernetes cluster. It adheres to the Open Container Initiative (OCI) standards, so it works with any OCI-compliant container image; images built with Docker or other OCI-compliant tools run under CRI-O as well. Red Hat has been a significant contributor to CRI-O.

- Shim Process: Before `runc` executes the container, `containerd` starts a shim process for it. The shim sits between `containerd` and `runc`, managing the container's lifecycle once `runc` has initialized it, handling stdout/stderr, and collecting exit codes. Running `ps aux | grep containerd-shim` on a node lists these shim processes.

  While only one `containerd` daemon runs on the node to manage all containers, there is a separate shim per container (with the `containerd-shim-runc-v2` shim used by current containerd releases, the containers of one Kubernetes pod typically share a single shim). This keeps each container's lifecycle management isolated and lets `containerd` start, stop, and handle I/O for a container without interacting with the container's process directly.

- runc: Once `containerd` has set everything up, `runc` is called to create and run the container. `runc` sets up namespaces, cgroups, and other isolation features according to the OCI specification, and then starts the container's main process (the application). `runc` is itself a CLI tool for running containers, but it is usually invoked indirectly through higher-level container management tools or runtimes (see Overview of runc below).

- Application Execution: The application within the container runs isolated from other processes on the host, thanks to the namespaces, cgroups, and other security mechanisms implemented by `runc` and managed by `containerd`.

- Auxiliary Components: Throughout this process, auxiliary components such as image storage, the snapshotter, and network plugins ensure the container has access to the filesystem, network, and other resources it needs.
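To see the one-daemon, many-shims layout on a node, you can inspect the process list. The sketch below is a minimal parser, assuming process lines in the `pid args` shape printed by `ps -eo pid,args` and the `-namespace`/`-id` flags that `containerd-shim-runc-v2` is usually started with. The sample lines and the helper names `shim_ids` and `daemon_count` are made up for illustration; real output varies by containerd version and distribution.

```python
from collections import defaultdict

# Hypothetical sample in the style of `ps -eo pid,args`; real output varies
# with the containerd version, shim version, and distribution.
SAMPLE_PS = """\
  812 /usr/bin/containerd
 4101 /usr/bin/containerd-shim-runc-v2 -namespace k8s.io -id 3f2a -address /run/containerd/containerd.sock
 4377 /usr/bin/containerd-shim-runc-v2 -namespace k8s.io -id 9c1d -address /run/containerd/containerd.sock
"""


def _executable(parts: list[str]) -> str:
    """Basename of the command in a `pid args...` line."""
    return parts[1].rsplit("/", 1)[-1]


def daemon_count(ps_output: str) -> int:
    """Count processes whose executable is exactly `containerd`."""
    count = 0
    for line in ps_output.splitlines():
        parts = line.split()
        if len(parts) >= 2 and _executable(parts) == "containerd":
            count += 1
    return count


def shim_ids(ps_output: str) -> dict[str, list[str]]:
    """Map containerd namespace -> list of shim `-id` values."""
    groups: dict[str, list[str]] = defaultdict(list)
    for line in ps_output.splitlines():
        parts = line.split()
        if len(parts) < 2 or not _executable(parts).startswith("containerd-shim"):
            continue
        args = parts[2:]
        # Pair each "-flag" with the token that follows it.
        flags = {args[i]: args[i + 1] for i in range(len(args) - 1) if args[i].startswith("-")}
        groups[flags.get("-namespace", "default")].append(flags.get("-id", "?"))
    return dict(groups)


print(daemon_count(SAMPLE_PS), shim_ids(SAMPLE_PS))  # 1 {'k8s.io': ['3f2a', '9c1d']}
```

On a real node you could feed it `subprocess.run(["ps", "-eo", "pid,args"], capture_output=True, text=True).stdout`. If shims are shared per pod, expect fewer shim processes than containers.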

How Kubelet Uses CRI with containerd

- Initiation: When Kubernetes decides to start a new container as part of a pod, the kubelet on the node where the pod is scheduled sends a command to the container runtime through the CRI.

- Communication: The kubelet communicates with `containerd` (or any other configured container runtime) using the CRI's gRPC APIs. This communication runs over a local UNIX socket (for `containerd`, typically `/run/containerd/containerd.sock`), ensuring secure and efficient message passing.

- Container Creation Flow:

  - Pull Image: If the required container image is not already present on the node, the kubelet instructs `containerd` to pull it from the specified registry using the `ImageService` API.

  - Start Container: Once the image is available locally, the kubelet uses the `RuntimeService` API to instruct `containerd` to create and start a new container based on that image (`CreateContainer`, then `StartContainer`, inside a pod sandbox created with `RunPodSandbox`). This involves setting up the container's environment according to the pod definition, such as volume mounts, environment variables, network settings, and security configurations.

  - Container Execution: On receiving the command, `containerd` performs the steps needed to start the container, including using `runc` or another OCI (Open Container Initiative) compatible runtime to create the container process. `containerd` then tracks the container's lifecycle and reports its state, logs, and metrics back to the kubelet.


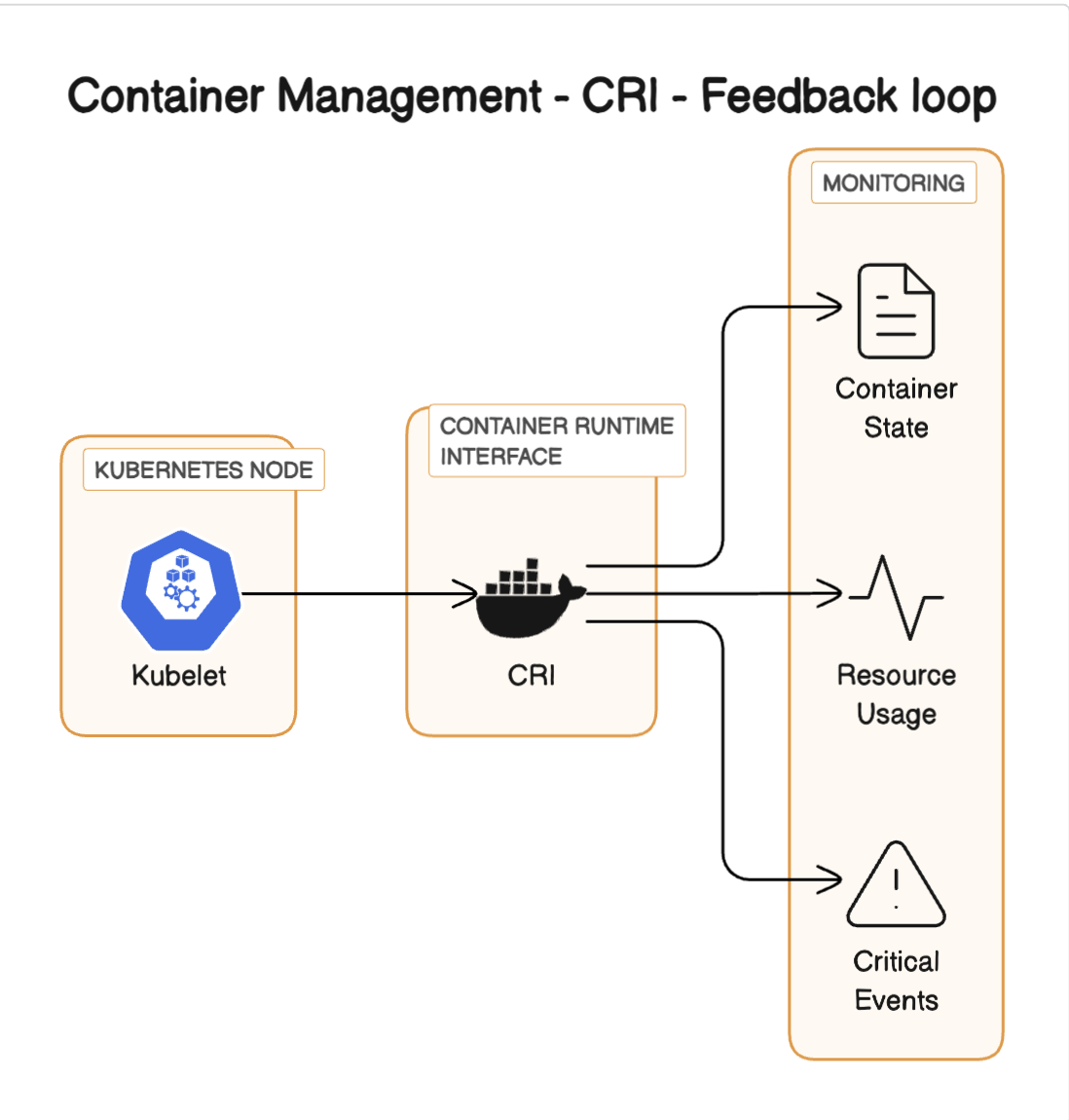
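The creation flow can be modeled as a short call sequence. This is a toy sketch, not kubelet code: `FakeRuntime` and `start_pod` are hypothetical names, and the methods only borrow the names of real CRI RPCs (`RunPodSandbox`, `ImageStatus`, `PullImage`, `CreateContainer`, `StartContainer`) with heavily simplified arguments. It assumes a "pull only if not present" image policy and records every call so the order is visible: the pod sandbox first, then pull-if-missing, create, and start for each container.

```python
from dataclasses import dataclass, field


@dataclass
class FakeRuntime:
    """Toy CRI runtime that records every call it receives."""

    local_images: set[str] = field(default_factory=set)
    calls: list[tuple[str, ...]] = field(default_factory=list)

    # --- ImageService-style calls ---
    def image_status(self, image: str) -> bool:
        self.calls.append(("ImageStatus", image))
        return image in self.local_images

    def pull_image(self, image: str) -> None:
        self.calls.append(("PullImage", image))
        self.local_images.add(image)

    # --- RuntimeService-style calls ---
    def run_pod_sandbox(self, pod: str) -> str:
        self.calls.append(("RunPodSandbox", pod))
        return f"sandbox-{pod}"

    def create_container(self, sandbox_id: str, image: str) -> str:
        self.calls.append(("CreateContainer", sandbox_id, image))
        return f"{sandbox_id}-c{len(self.calls)}"

    def start_container(self, container_id: str) -> None:
        self.calls.append(("StartContainer", container_id))


def start_pod(runtime: FakeRuntime, pod: str, images: list[str]) -> list[str]:
    """Sandbox first, then pull-if-missing, create, and start each container."""
    sandbox_id = runtime.run_pod_sandbox(pod)
    started = []
    for image in images:
        if not runtime.image_status(image):  # pull only when missing locally
            runtime.pull_image(image)
        container_id = runtime.create_container(sandbox_id, image)
        runtime.start_container(container_id)
        started.append(container_id)
    return started


rt = FakeRuntime(local_images={"nginx:1.25"})
start_pod(rt, "web", ["nginx:1.25", "busybox:1.36"])
print([call[0] for call in rt.calls])
```

Only `busybox:1.36` triggers a `PullImage` call, because `nginx:1.25` is already in the fake local image store.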

- Feedback Loop: The kubelet continuously monitors the status of the container through the CRI, receiving updates about the container's state, resource usage, and any critical events. This information is used to manage the container's lifecycle, such as restarting failed containers or cleaning up completed ones.
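The restart side of the feedback loop boils down to two decisions: whether an exited container should come back, and how long to wait first. The sketch below is a simplified model assuming the pod `restartPolicy` values (`Always`, `OnFailure`, `Never`) and the back-off described in the Kubernetes docs (10 seconds, doubling, capped at five minutes). The function names and the `(state, exit_code)` status tuple are hypothetical simplifications of what `ContainerStatus` reports.

```python
RESTART_POLICIES = ("Always", "OnFailure", "Never")


def should_restart(policy: str, exit_code: int) -> bool:
    """Whether an exited container is restarted under a pod restartPolicy."""
    if policy not in RESTART_POLICIES:
        raise ValueError(f"unknown restartPolicy: {policy!r}")
    if policy == "Always":
        return True
    if policy == "OnFailure":
        return exit_code != 0
    return False  # "Never"


def backoff_seconds(restarts: int, initial: int = 10, cap: int = 300) -> int:
    """Delay before the next restart: doubles per restart, capped at `cap`."""
    return min(initial * 2 ** restarts, cap)


def containers_to_restart(statuses: dict[str, tuple[str, int]], policy: str) -> list[str]:
    """Names of exited containers that should be restarted, sorted.

    `statuses` maps container name -> (CRI state, exit code), a simplified
    stand-in for ContainerStatus responses.
    """
    return sorted(
        name
        for name, (state, exit_code) in statuses.items()
        if state == "CONTAINER_EXITED" and should_restart(policy, exit_code)
    )


print([backoff_seconds(n) for n in range(6)])  # [10, 20, 40, 80, 160, 300]
```

The real kubelet also resets the back-off once a container has run for 10 minutes without problems; the sketch leaves that timer out.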


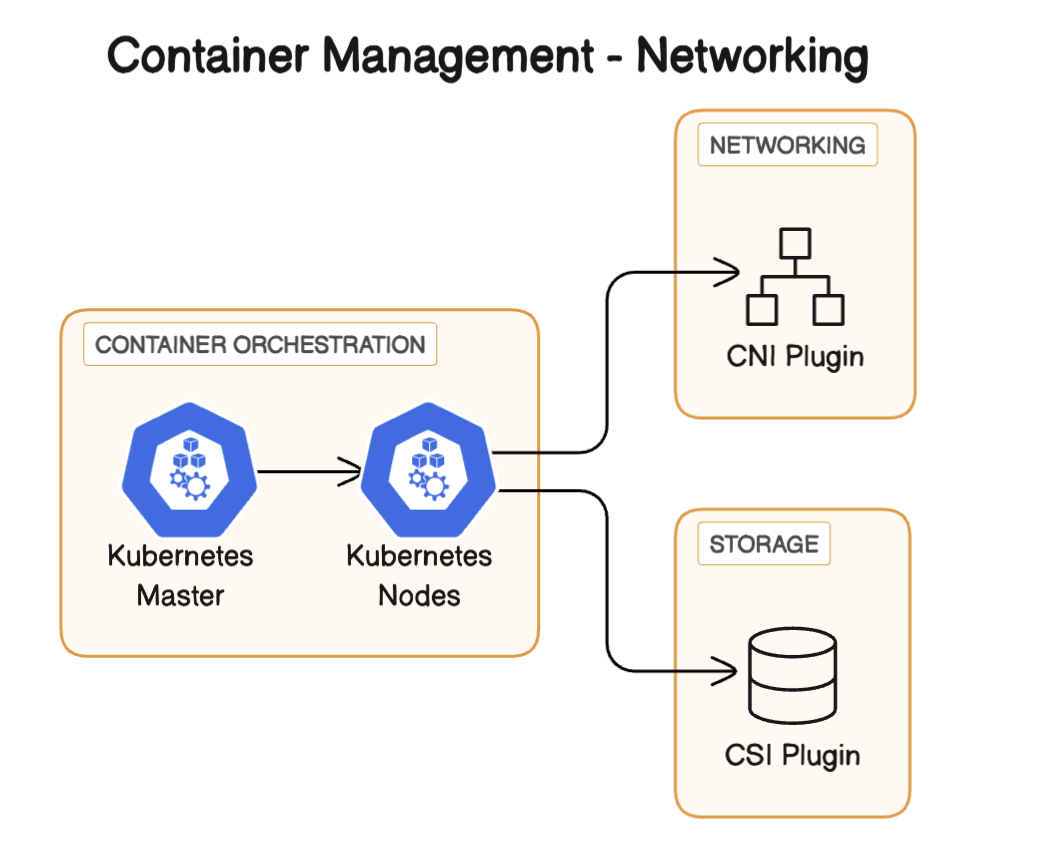

- Networking and Storage: Alongside container management, the CRI works with other Kubernetes components to give containers the network access and storage defined in their configurations: the container runtime invokes CNI plugins to set up pod networking, while the kubelet uses CSI drivers to prepare volumes that are then passed to the runtime as mounts.

Standards in Containerization - OCI and CRI

The pre-standardization era was a time of rapid innovation, but it also posed significant challenges that shaped the future of containerization. Until 2015-2016, it was marked by a proliferation of container formats and runtimes, interoperability hurdles for developers and operators, and the resulting risk of vendor lock-in.

The Open Container Initiative (OCI) and Container Runtime Interface (CRI) are both crucial standards in the containerization ecosystem, playing important roles in how containers are created, managed, and run.

Open Container Initiative (OCI)

The Open Container Initiative (OCI) is a Linux Foundation project that develops open standards for container formats and runtimes. Its two core specifications are the Runtime Specification (runtime-spec) and the Image Specification (image-spec); a third, the Distribution Specification (distribution-spec), standardizes how registries serve images. The Runtime Specification describes how to run a container, typically from an image that complies with the Image Specification. The goal is interoperability between container tools and platforms that adhere to OCI standards, such as Docker and Podman.
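To make the Image Specification concrete: an OCI image manifest lists a config blob and layer blobs, each referenced by a content descriptor (media type, `sha256` digest, and size in bytes). The sketch below builds such a manifest from raw bytes. The blobs are placeholders rather than real gzipped tar layers, the helper names are hypothetical, and a real image config also needs fields such as `rootfs`.

```python
import hashlib
import json

MANIFEST_TYPE = "application/vnd.oci.image.manifest.v1+json"
CONFIG_TYPE = "application/vnd.oci.image.config.v1+json"
LAYER_TYPE = "application/vnd.oci.image.layer.v1.tar+gzip"


def descriptor(media_type: str, blob: bytes) -> dict:
    """Content descriptor: media type, sha256 digest of the bytes, and size."""
    return {
        "mediaType": media_type,
        "digest": "sha256:" + hashlib.sha256(blob).hexdigest(),
        "size": len(blob),
    }


def build_manifest(config: bytes, layers: list[bytes]) -> dict:
    """Assemble an image manifest referencing the config and layer blobs."""
    return {
        "schemaVersion": 2,
        "mediaType": MANIFEST_TYPE,
        "config": descriptor(CONFIG_TYPE, config),
        "layers": [descriptor(LAYER_TYPE, layer) for layer in layers],
    }


# Placeholder blobs: a trimmed config and a fake layer.
config_blob = json.dumps({"architecture": "amd64", "os": "linux"}).encode()
manifest = build_manifest(config_blob, [b"not-really-a-tarball"])
print(json.dumps(manifest, indent=2))
```

Because every reference is a digest of content, any OCI-compliant runtime can verify that what it pulled matches the manifest, which is a large part of why images built with one tool run under another.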

Container Runtime Interface (CRI)

Container Runtime Interface (CRI), on the other hand, is a plugin interface defined by Kubernetes. It specifies how a container orchestrator (like Kubernetes) should interact with container runtimes to manage the lifecycle of containers on a node. CRI is designed to abstract away the specifics of container runtimes from Kubernetes' core code, enabling users to switch between different runtimes (like containerd, CRI-O, and others) without having to change Kubernetes itself. This decouples Kubernetes from specific container runtimes, promoting flexibility and extensibility.
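The decoupling can be sketched with a structural interface: the caller is written once against the interface, and switching runtimes only changes which endpoint is used. This is a toy model with hypothetical classes `ToyContainerd` and `ToyCrio`; the method names merely echo the CRI's `Version` and `ListContainers` RPCs, while the real interface is a gRPC service defined in protobuf. The socket paths are common defaults (the kubelet is pointed at one with `--container-runtime-endpoint`), not guaranteed on every node.

```python
from typing import Protocol


class RuntimeService(Protocol):
    """Hypothetical, tiny slice of the CRI RuntimeService surface."""

    def version(self) -> str: ...

    def list_containers(self) -> list[str]: ...


class ToyContainerd:
    def version(self) -> str:
        return "containerd"

    def list_containers(self) -> list[str]:
        return ["web"]


class ToyCrio:
    def version(self) -> str:
        return "cri-o"

    def list_containers(self) -> list[str]:
        return ["web", "sidecar"]


# Common default sockets; the actual path is node configuration.
ENDPOINTS: dict[str, type] = {
    "unix:///run/containerd/containerd.sock": ToyContainerd,
    "unix:///var/run/crio/crio.sock": ToyCrio,
}


def connect(endpoint: str) -> RuntimeService:
    """Pick a runtime implementation by endpoint."""
    return ENDPOINTS[endpoint]()


def node_summary(runtime: RuntimeService) -> str:
    """Caller-side logic: depends only on the interface, never on the runtime."""
    return f"{runtime.version()}: {len(runtime.list_containers())} container(s)"


for endpoint in ENDPOINTS:
    print(node_summary(connect(endpoint)))
```

`node_summary` never changes when the runtime does, which is the property the CRI gives the kubelet.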

Why Kubernetes Transitioned Away from Docker

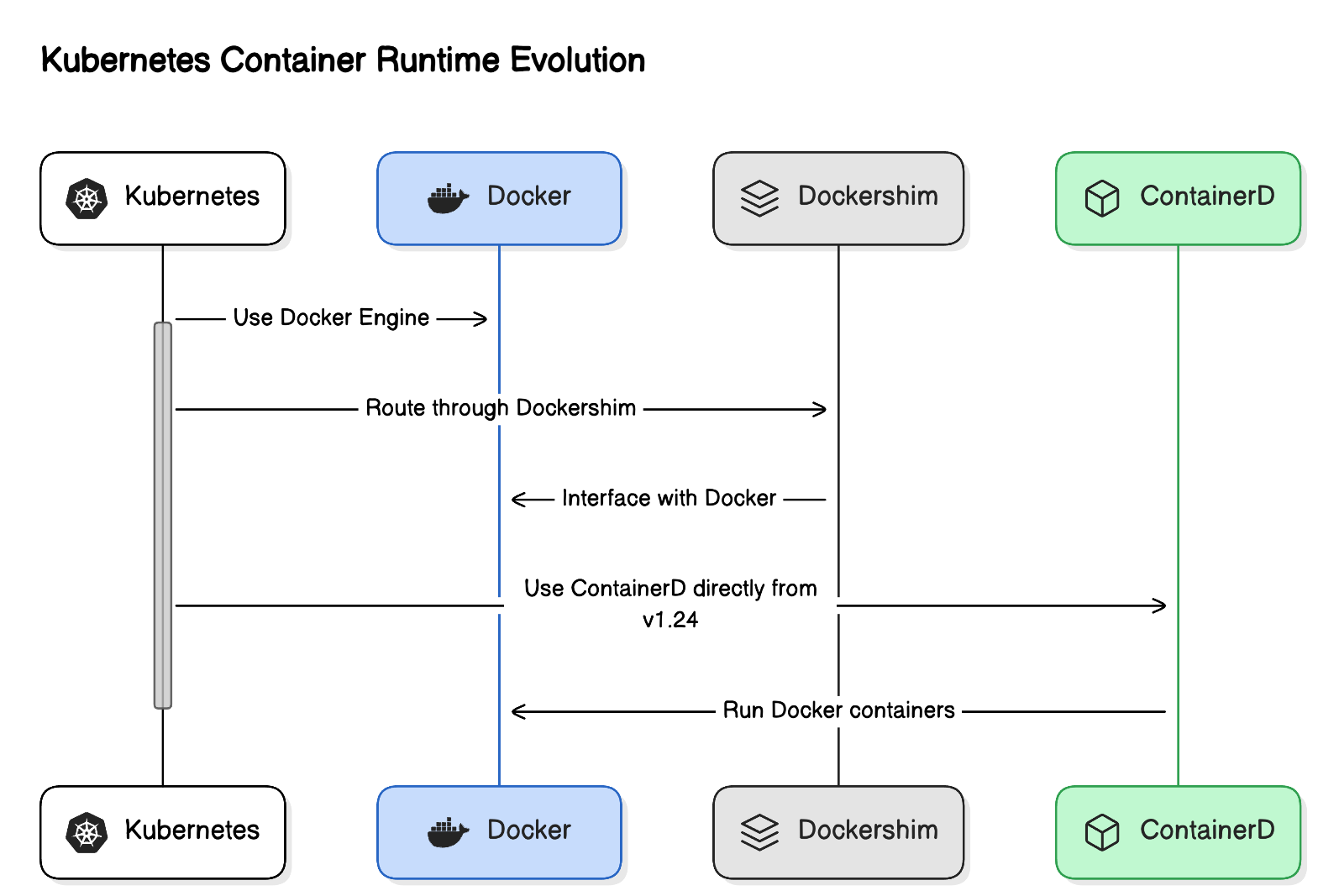

Firstly, it's important to clarify that Kubernetes did not phase out Docker in the way many might assume. Rather, it removed dockershim, the built-in adapter that let the kubelet talk to Docker Engine (deprecated in Kubernetes 1.20 and removed in 1.24).

Kubernetes can still run Docker-built images, and Docker itself runs containers through containerd under the hood. Because containerd is fully compatible with Kubernetes through the Container Runtime Interface (CRI), there was no need to keep maintaining the less efficient dockershim as a separate intermediary layer. Unlike Docker Engine, runtimes such as containerd and CRI-O interface directly with Kubernetes via the CRI; clusters that still want Docker Engine on their nodes can use the externally maintained `cri-dockerd` adapter.

The main reason Kubernetes distanced itself from Docker is that Docker Engine does not implement the CRI. Kubernetes interacts with container runtimes through the Container Runtime Interface, which is designed to provide the necessary functionality directly. Docker Engine exposes its own API instead, so a translation component, dockershim, was needed to bridge the gap to Kubernetes.

Eliminating Dockershim reduces the complexity and maintenance burden for Kubernetes developers and operators. By supporting container runtimes that natively implement the CRI, Kubernetes aims to simplify backend interactions and enhance overall system performance.

The diagram at the top of this section visualizes the change.
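The simplification can also be expressed as the chain of components a "start container" request passes through. This is a rough sketch: the hop lists are a simplification (dockershim actually ran inside the kubelet process), and `removed_hops` is a hypothetical helper.

```python
# Simplified request paths from the kubelet down to the OCI runtime.
WITH_DOCKERSHIM = ["kubelet", "dockershim", "dockerd", "containerd", "containerd-shim", "runc"]
WITH_CRI = ["kubelet", "containerd", "containerd-shim", "runc"]


def removed_hops(before: list[str], after: list[str]) -> list[str]:
    """Components present in the old path but not in the new one, in order."""
    remaining = set(after)
    return [hop for hop in before if hop not in remaining]


print(" -> ".join(WITH_DOCKERSHIM))
print(" -> ".join(WITH_CRI))
print("removed:", removed_hops(WITH_DOCKERSHIM, WITH_CRI))
```

Everything below containerd stays the same; only the translation layer and the Docker daemon drop out of the path.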

Improvements of the Transition

- Operational Clarity: Removing the dependency on Dockershim simplifies the container runtime layer, making it easier for cluster administrators to manage and troubleshoot Kubernetes environments.

- Performance and Flexibility: Directly using CRI-compliant runtimes like containerd and CRI-O, which are both part of the Cloud Native Computing Foundation (CNCF) landscape, can lead to better performance and flexibility. These runtimes are optimized for Kubernetes environments and provide a leaner, more focused approach to running containers.

- No Impact on End Users: It's crucial to note that this change does not affect the development process for individuals and teams that use Docker to build their container images. Docker images remain compliant with the OCI standards, meaning they can be run by any CRI-compliant container runtime without modification.

❗Please Note: Kubernetes' shift away from using Docker directly as a container runtime through the deprecation of Dockershim often leads to misunderstandings about Kubernetes' support for Docker. The key point is that Kubernetes is not abandoning Docker; rather, it is evolving its architecture to be more efficient and flexible in how it interacts with container runtimes.

Some Tooling

In this section we introduce some CLI tools related to container runtimes and the Container Runtime Interface.

The table below summarizes the tools covered:

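| Tool | Purpose | Key Functionalities | Works with |
|---|---|---|---|
| ctr | Low-level container management directly with containerd | Direct, unabstracted image and container operations; intended mainly for debugging and development | containerd |
| nerdctl | Provides Docker-compatible commands for managing containers using containerd | Familiar Docker-style commands for developers and operators | containerd |
| crictl | Interface with the Container Runtime Interface (CRI) used by Kubernetes | Managing container and image lifecycles through the CRI; debugging and operational tasks | All CRI-compatible runtimes |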

ctr tool

ctr is a command-line interface (CLI) tool designed for interacting directly with containerd, an industry-standard core container runtime. As part of the broader containerd project, ctr serves as a low-level utility meant primarily for debugging and development purposes rather than for production use. It provides administrators and developers with a direct and unabstracted interface to manage container and image operations within the containerd runtime.

nerdctl tool

nerdctl is a command-line tool that provides Docker-compatible CLI functionality for managing containers within the containerd ecosystem. As containerd serves as a low-level container runtime in Kubernetes environments, nerdctl extends its capabilities by offering a more familiar and accessible interface for developers and operators who are accustomed to Docker's commands.

crictl tool

crictl is a command-line interface tool specifically designed for interacting with the Container Runtime Interface (CRI) in Kubernetes environments. The CRI is a plugin interface which enables Kubernetes to use a variety of container runtimes, without needing to integrate them directly into Kubernetes' core code. crictl provides essential functionalities to manage the lifecycle of containers and images directly through the CRI, making it a critical tool for debugging and operational tasks in a Kubernetes cluster.
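In practice, the three tools often look at the same containers from different angles. Kubernetes-managed containers live in the containerd namespace `k8s.io`, so `ctr` and `nerdctl` need that namespace to see them, while `crictl` only needs the CRI endpoint. The sketch below builds a listing command for each tool; the socket path is a common default, `list_containers_cmd` and `run_if_available` are hypothetical helpers, and actually running the commands usually requires root on the node.

```python
import shutil
import subprocess
from typing import Optional

# Common default containerd socket; check your node's configuration.
CONTAINERD_ENDPOINT = "unix:///run/containerd/containerd.sock"
# containerd namespace in which Kubernetes (via the CRI plugin) keeps its containers.
K8S_NAMESPACE = "k8s.io"


def list_containers_cmd(tool: str) -> list[str]:
    """argv that lists Kubernetes-managed containers with the given tool."""
    if tool == "crictl":
        return ["crictl", "--runtime-endpoint", CONTAINERD_ENDPOINT, "ps", "-a"]
    if tool == "ctr":
        return ["ctr", "--namespace", K8S_NAMESPACE, "containers", "list"]
    if tool == "nerdctl":
        return ["nerdctl", "--namespace", K8S_NAMESPACE, "ps", "-a"]
    raise ValueError(f"unsupported tool: {tool!r}")


def run_if_available(argv: list[str]) -> Optional[str]:
    """Run argv and return stdout, or None if the binary is not on PATH."""
    if shutil.which(argv[0]) is None:
        return None
    result = subprocess.run(argv, capture_output=True, text=True, check=False)
    return result.stdout


for tool in ("crictl", "ctr", "nerdctl"):
    print(" ".join(list_containers_cmd(tool)))
```

Forgetting the `k8s.io` namespace is a common reason `ctr` or `nerdctl` appears to show no containers on a Kubernetes node.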

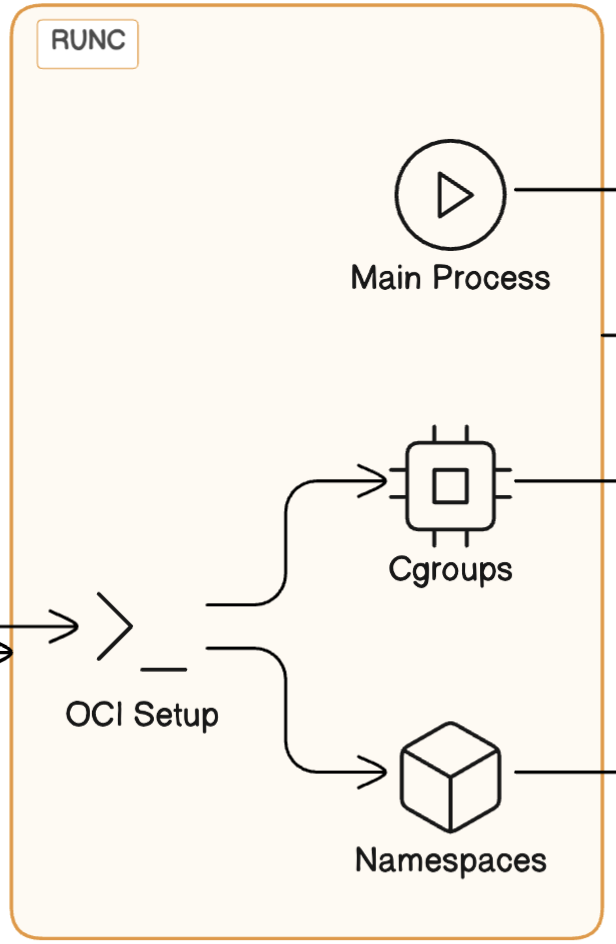

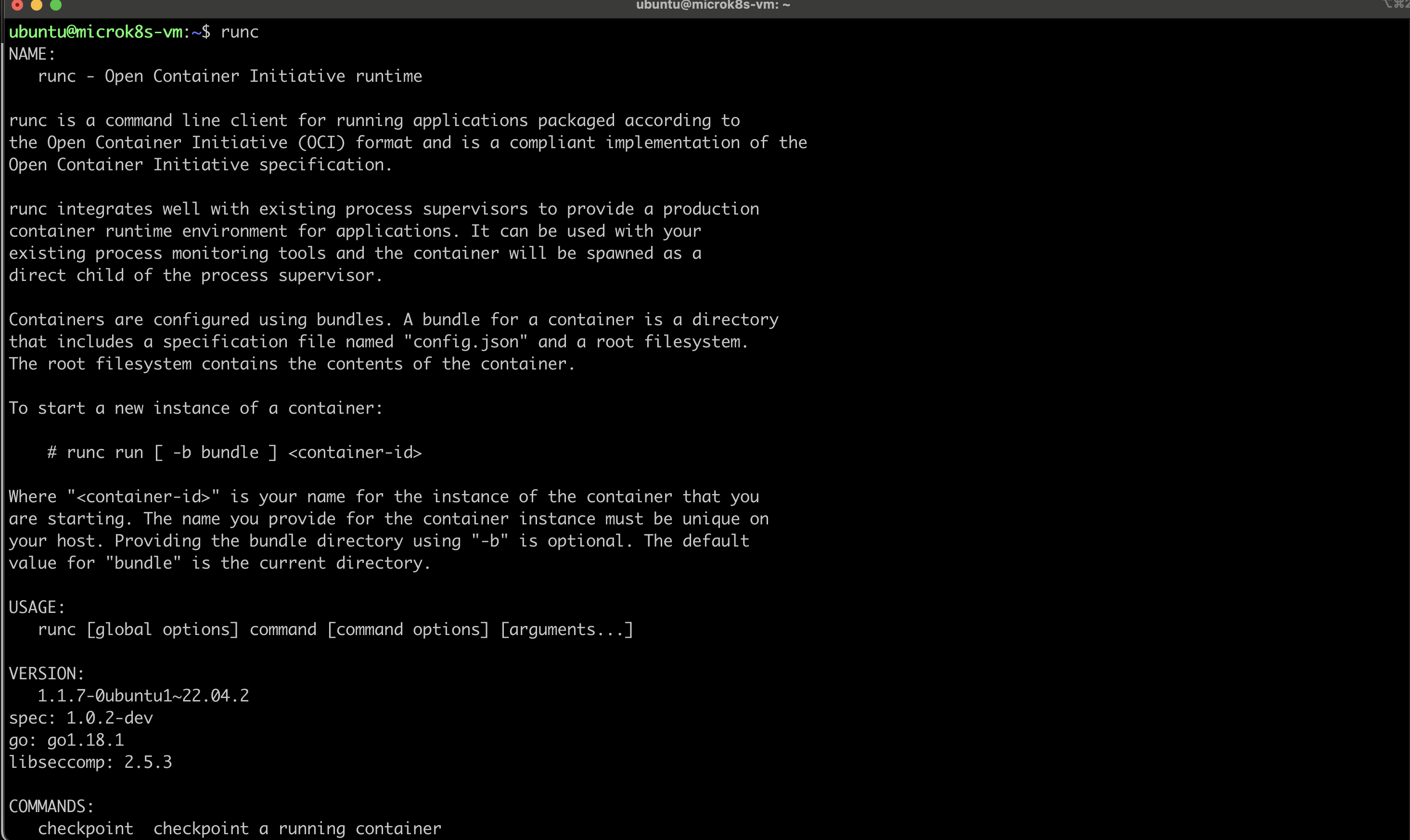

Overview of runc

- Open Container Initiative (OCI): `runc` is the reference implementation of the OCI Runtime Specification, which defines how to run containers on a Linux system.

- Functionality: `runc` is responsible for actually creating and running containers. It takes a container configuration (a JSON file in the format defined by the OCI) and uses Linux system calls to set up the container's environment and then run the container's main process. This involves configuring namespaces, cgroups, security features, and other isolation mechanisms provided by the Linux kernel.

- Linux Namespaces (not K8s namespaces): `runc` makes extensive use of Linux namespaces to isolate containers from each other. Namespaces give each container its own view of the system, including the process tree, network interfaces, mount points, and user IDs.

- Control Groups (cgroups): `runc` uses cgroups to limit and monitor the resources a container can use, such as CPU time, memory, disk I/O, and the number of processes, which helps prevent any single container from exhausting the host's resources. Network bandwidth is usually shaped by other mechanisms, such as traffic control, rather than by cgroups.

- Root Filesystem: `runc` mounts the container's root filesystem as specified in the container's configuration. This filesystem is usually a layered filesystem prepared from a container image by the higher-level runtime (in `containerd`'s case, by a snapshotter), which keeps containers lightweight and quick to start.

- Security: `runc` applies security mechanisms such as SELinux labels, AppArmor profiles, and seccomp filters to restrict what a container process can do, significantly reducing the container's attack surface.

- Execution Flow: `runc` typically reads the container's specification (`config.json`), sets up the environment it describes (namespaces, cgroups, filesystem mounts, and so on), and then executes the container's main process. When run detached, as it is under `containerd`'s shim, `runc` then exits, leaving the container process running in the configured environment.
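A trimmed `config.json` shows how the pieces above map onto the OCI Runtime Specification: `process` describes the main process, `root` points at the root filesystem, and `linux.namespaces` and `linux.resources` drive namespaces and cgroups. This is a sketch assuming spec version 1.0.2 field names; a file generated by `runc spec` also contains mounts (such as `/proc`), capabilities, and more, so this minimal version is not meant to be handed to `runc` as-is. `minimal_runtime_config` is a hypothetical helper.

```python
import json
from typing import Optional

# Namespace types as spelled in the OCI runtime spec.
NAMESPACES = ["pid", "network", "ipc", "uts", "mount"]


def minimal_runtime_config(args: list[str], rootfs: str = "rootfs",
                           memory_limit: Optional[int] = None) -> dict:
    """Return a trimmed OCI runtime config as a plain dict."""
    config = {
        "ociVersion": "1.0.2",
        "process": {
            "terminal": False,
            "user": {"uid": 0, "gid": 0},
            "args": list(args),  # the container's main process
            "env": ["PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"],
            "cwd": "/",
        },
        "root": {"path": rootfs, "readonly": True},  # relative to the bundle dir
        "hostname": "sketch",
        "linux": {
            # One entry per namespace; omitting "path" asks for a new namespace.
            "namespaces": [{"type": ns} for ns in NAMESPACES],
        },
    }
    if memory_limit is not None:
        # Memory limit in bytes, enforced through the memory cgroup.
        config["linux"]["resources"] = {"memory": {"limit": memory_limit}}
    return config


print(json.dumps(minimal_runtime_config(["sh"], memory_limit=64 * 1024 * 1024), indent=2))
```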

Usage and Integration

- Direct Use: While it's possible to use `runc` directly to run containers, it's more often used indirectly through higher-level container management tools or runtimes that handle more complex scenarios, such as image management and network configuration.

- Integration with Container Runtimes: Higher-level container runtimes like `containerd` and CRI-O use `runc` to run containers. These tools manage the container lifecycle (e.g., image pulling, storage management, networking) and delegate the lower-level task of creating and running container processes to `runc`.
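What a higher-level runtime hands to `runc` is an OCI bundle: a directory containing `config.json` plus the root filesystem it points to. The sketch below checks that basic layout, looking only at the `root.path` and `process.args` fields; `check_bundle` and `demo` are hypothetical helpers, and passing this check does not mean `runc` will accept the bundle.

```python
import json
import tempfile
from pathlib import Path
from typing import Union


def check_bundle(bundle_dir: Union[str, Path]) -> list[str]:
    """Return problems found in an OCI bundle directory (empty list = looks OK)."""
    bundle = Path(bundle_dir)
    config_file = bundle / "config.json"
    if not config_file.is_file():
        return ["missing config.json"]
    try:
        config = json.loads(config_file.read_text())
    except json.JSONDecodeError as exc:
        return [f"config.json is not valid JSON: {exc}"]
    problems = []
    root = config.get("root", {}).get("path")
    if not root:
        problems.append("root.path is not set")
    elif not (bundle / root).is_dir():  # an absolute root.path overrides `bundle`
        problems.append(f"root filesystem not found: {root}")
    if not config.get("process", {}).get("args"):
        problems.append("process.args is empty")
    return problems


def demo() -> list[list[str]]:
    """Build a throwaway bundle step by step and check it at each stage."""
    results = []
    with tempfile.TemporaryDirectory() as tmp:
        bundle = Path(tmp)
        results.append(check_bundle(bundle))  # nothing there yet
        config = {"root": {"path": "rootfs"}, "process": {"args": ["sh"]}}
        (bundle / "config.json").write_text(json.dumps(config))
        results.append(check_bundle(bundle))  # config present, rootfs missing
        (bundle / "rootfs").mkdir()
        results.append(check_bundle(bundle))  # basic layout complete
    return results


print(demo())
```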

Assess Your Knowledge

1. What is the purpose of the Container Runtime Interface (CRI) in Kubernetes?

- A) To provide a graphical interface for managing containers

- B) To abstract the container runtime from the kubelet, allowing Kubernetes to use different container runtimes

- C) To increase the complexity of Kubernetes

- D) To replace Docker as the only container runtime for Kubernetes

2. Which component is responsible for pulling the necessary image if it's not already available locally?

- A) Kubernetes Orchestrator

- B) Shim Process

- C) containerd

- D) runc

3. What role does the shim process play in container management?

- A) It replaces the kubelet in Kubernetes architectures.

- B) It manages the container's lifecycle after runc has initialized it, including handling stdout/stderr and collecting exit codes.

- C) It directly executes the container's main process.

- D) It serves as the primary interface between Kubernetes and the underlying container runtime.

4. How does Kubernetes decide when to start a new container?

- A) Based on the PodSpecs provided to the Kubernetes Orchestrator.

- B) Through manual intervention by the system administrator.

- C) Automatically at predetermined intervals.

- D) Using external monitoring tools outside of Kubernetes.

5. Which tool is described as a CLI tool for running containers that sets up namespaces, cgroups, and other isolation features?

- A) Docker

- B) Kubernetes Orchestrator

- C) containerd

- D) runc

6. What is the primary reason Kubernetes transitioned away from Docker as its container runtime?

- A) Docker's performance issues

- B) Docker's use of a different container runtime interface than what Kubernetes requires

- C) The high cost of using Docker

- D) Security vulnerabilities in Docker

7. Which of the following is NOT a role of containerd?

- A) Pulling images from a registry

- B) Managing the container's lifecycle

- C) Interfacing directly with Kubernetes via CRI

- D) Executing the container's main process

8. What does OCI stand for, and what is its purpose?

- A) Open Container Initiative; to develop open standards for container formats and runtimes

- B) Operational Container Integration; to integrate containers into operational workflows

- C) Official Container Institute; to certify container technologies

- D) Open Code Initiative; to promote open-source coding standards

9. How does the kubelet communicate with container runtimes?

- A) Via HTTP REST APIs

- B) Using SSH connections

- C) Through the CRI's gRPC APIs over a UNIX socket

- D) Directly executing shell commands

10. What significant change does the transition away from Dockershim in Kubernetes indicate?

- A) Kubernetes will no longer support containers.

- B) Kubernetes aims to streamline operations and improve performance by supporting CRI-compliant runtimes directly.

- C) Kubernetes is moving away from open-source technologies.

- D) The Docker company is discontinuing Docker.

Answers: B, C, B, A, D, B, D, A, C, B

Conclusion

In summary, exploring the Kubernetes container runtime ecosystem, which includes CRI, containerd, and runc, provides insight into how container orchestration functions.

The Container Runtime Interface (CRI) acts as a middle layer, allowing Kubernetes to work with different container runtimes. containerd and runc are crucial for managing containers throughout their lifecycle and ensuring they run securely and efficiently within Kubernetes.

The move from dockershim to runtimes that implement the CRI natively reflects a focus on performance and consistency, and it aligns with the Open Container Initiative (OCI) standards that promote compatibility across container technologies.

Using open standards instead of relying heavily on Docker helps reduce dependency and promotes interoperability within the container technology landscape.