Scaling ArgoCD on AWS EKS: Insights from AWS Open Source Blog

📣The AWS Open Source Blog recently published an article where the AWS team conducted experiments on the scalability of Argo CD within AWS EKS. They tested it by deploying 10,000 applications across 1, 10, and 97 remote clusters, which is pretty impressive if you ask me. If you're using Argo CD to manage your applications running in your clusters, this is a must-read. Super interesting!

link: https://aws.amazon.com/blogs/opensource/argo-cd-application-controller-scalability-testing-on-amazon-eks/

The primary goal of the AWS experiment is to rigorously test and analyze the scalability thresholds of Argo CD, focusing on its ability to manage 10,000 Argo CD applications across an extensive number of clusters.

By simulating the deployment of 10,000 Argo CD applications to clusters ranging from a single instance to nearly a hundred, AWS aims to identify critical scalability bottlenecks and develop strategies to mitigate them. This initiative not only serves to enhance the user experience on EKS but also contributes to the broader open-source community by sharing findings and improvements.

Here is some background that I think is worth reading before diving into the AWS experiment.

ArgoCD Components

| Component | Purpose |
|---|---|
| application-controller-0 | Manages the lifecycle of applications and continuously reconciles the application state from the desired state defined in the Git repository. |
| applicationset-controller | Provides the ability to manage multiple Argo CD applications as a set. It defines a template and a generator, where the template defines the application and the generator provides parameters for creating multiple instances. |
| dex-server | Provides an identity service with OpenID Connect (OIDC) that can connect to multiple identity providers for Argo CD to authenticate users. |
| notifications-controller | Manages notifications for Argo CD, such as sending alerts or updates related to the application's status changes. This is configurable and can integrate with various messaging services like Slack, email, etc. |
| redis | Implements a Redis data store, used by Argo CD for caching and high performance data retrieval requirements. |
| repo-server | Serves as a service which maintains a local cache of the repository holding the application manifests. It is also responsible for generating and returning the manifests to the application controller. |
| argocd-server | Provides the API and the user interface for Argo CD, allowing users to visualize application activities, manage configurations, and interact with the Argo CD system. |

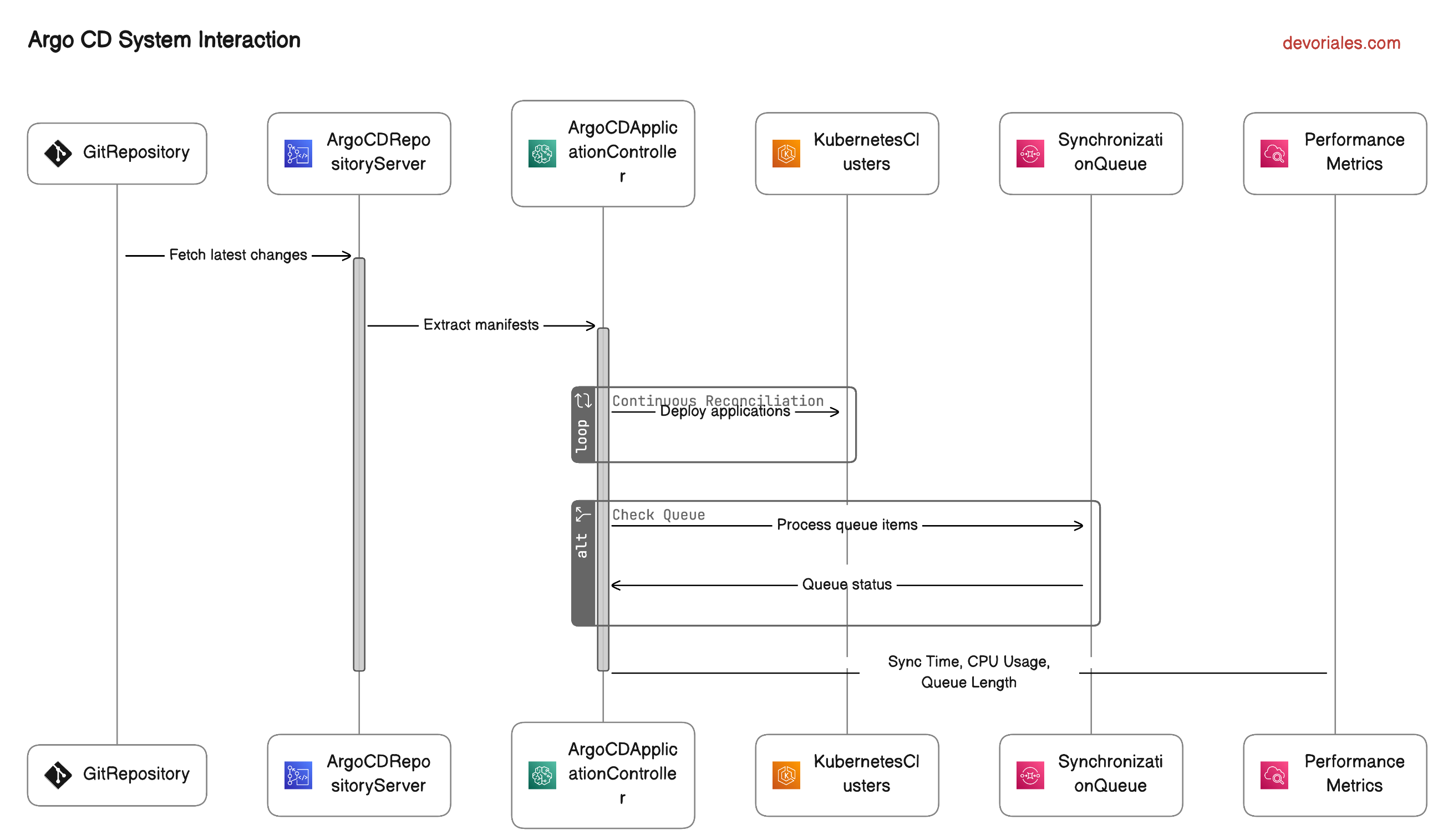

Diagram for some clarifications

The following diagram illustrates the flow of data and commands through Argo CD's architecture and also highlights the critical roles played by each component in ensuring that the desired state of applications defined in Git is accurately reflected in live Kubernetes environments.

From fetching the latest changes in a Git repository to deploying these changes across multiple Kubernetes clusters, the diagram encapsulates the complete lifecycle of a synchronization process:

Workflow Explanation:

- The process starts at the Git Repository, where any changes trigger the Argo CD system.

- The Argo CD Repository Server fetches these changes, activating its role in the workflow.

- The Argo CD Application Controller then extracts these manifests and begins the reconciliation process, continuously deploying updates to the Kubernetes Clusters to ensure the actual state matches the desired state.

- Parallel to this, the Synchronization Queue manages the order and priority of tasks, potentially becoming a bottleneck if overloaded. This queue's status is regularly checked and fed back to the application controller.

- Throughout this process, Performance Metrics are monitored to ensure the system's efficiency and to quickly identify and address any issues that may arise.
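To make the idea of reconciliation more concrete, here is a minimal Python sketch of the comparison step: it takes the desired state (what Git says) and the live state (what the cluster reports) and works out what would have to change. This is a simplified illustration, not Argo CD's actual diffing logic; the function names and the dictionary layout are assumptions made for this example.

```python
def diff_states(desired: dict, live: dict) -> dict:
    """Compare desired manifests (from Git) with live objects (from the cluster).

    Both arguments map a resource key such as "Deployment/web" to its spec.
    """
    to_create = sorted(k for k in desired if k not in live)
    to_delete = sorted(k for k in live if k not in desired)
    to_update = sorted(k for k in desired if k in live and desired[k] != live[k])
    return {"create": to_create, "update": to_update, "delete": to_delete}


def sync_status(desired: dict, live: dict) -> str:
    """Return "Synced" when nothing differs, otherwise "OutOfSync"."""
    changes = diff_states(desired, live)
    return "Synced" if not any(changes.values()) else "OutOfSync"


desired = {
    "Deployment/web": {"replicas": 3},
    "Service/web": {"port": 80},
}
live = {
    "Deployment/web": {"replicas": 2},  # drifted from Git
    "ConfigMap/old": {"key": "value"},  # no longer present in Git
}

print(diff_states(desired, live))
print(sync_status(desired, live))
```

In Argo CD itself, whether the "delete" part is acted on depends on the sync policy (pruning), which is covered further down.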

In Argo CD, various configurations can be adjusted to optimize synchronization and reconciliation processes, helping to handle changes efficiently and maintain application state consistently across Kubernetes clusters. These settings can be fine-tuned based on the scale of deployment, the number of managed resources, and specific performance needs. Here are the primary configuration settings related to sync and reconciliation in Argo CD:

Configurations

This section walks through the parameters we can set, focusing on the ones that matter for the AWS article.

1. Resource Settings in Application Controller:

This section of the ConfigMap is where you specify various configurations that influence how the Argo CD application controller behaves. In this particular example, you are configuring the number of processors dedicated to handling status updates and operational tasks.

controller.status.processors

- Purpose: This setting specifies the number of concurrent workers that the application controller allocates for processing status updates.

- Function: Status processors are responsible for monitoring and updating the status of resources managed by Argo CD. This includes checking if the actual state of a resource in the Kubernetes cluster matches the desired state defined in the Git repository.

- Impact: Increasing the number of status processors can improve the responsiveness and accuracy of status reporting in the Argo CD UI and APIs, especially in large deployments where many resources need continuous monitoring. It helps in quicker status reconciliation but requires more CPU and memory resources.

- Default value: 20

controller.operation.processors

- Purpose: This setting defines the number of concurrent workers that handle the operations tasked with syncing the actual state of resources in Kubernetes with the desired state defined in the Git repositories.

- Function: Operation processors manage the execution of create, update, and delete operations on Kubernetes resources as defined by the Git repository configurations. They actively change the state of resources to ensure they match what's specified in Git.

- Impact: Setting a higher number of operation processors can significantly speed up the synchronization process, allowing Argo CD to handle more simultaneous sync operations. This is particularly useful in environments with a high rate of change or when managing a large number of applications. Similar to status processors, more operation processors will also increase the resource (CPU and memory) usage.

- Default value: 10

Example:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: argocd-cmd-params-cm
    data:
      # Number of status processors (ConfigMap values must be strings)
      controller.status.processors: "10"
      # Number of operation processors
      controller.operation.processors: "20"

Considerations

- Resource Usage: Both settings increase the parallelism of the Argo CD application controller. While this can improve performance by handling more tasks simultaneously, it also increases the resource demands (CPU and memory) on the Kubernetes cluster where Argo CD is running.

- Tuning Advice: The optimal numbers for these settings depend on the specific needs of your environment, including the number of applications managed, the frequency of updates, and the capacity of your Kubernetes cluster. It's recommended to start with the default or a moderate value and monitor the system's performance, adjusting as necessary based on the observed load and response times.
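As a rough way to reason about these two settings, the sketch below models an idealized worker pool in which every item takes the same time and nothing else (such as Kubernetes API rate limits) gets in the way. The function name and the 0.5-second processing time are assumptions made for illustration, not values from Argo CD or the AWS article.

```python
import math


def drain_time_seconds(queue_length: int, workers: int, seconds_per_item: float) -> float:
    """Estimate how long an idealized pool of workers needs to empty a queue."""
    if workers < 1:
        raise ValueError("workers must be at least 1")
    # Workers process items in parallel "rounds" of up to `workers` items each.
    rounds = math.ceil(queue_length / workers)
    return rounds * seconds_per_item


# 10,000 queued applications, assuming 0.5 s of work per application.
for workers in (20, 50):
    estimate = drain_time_seconds(10_000, workers, 0.5)
    print(f"{workers} processors -> about {estimate:.0f} s")
```

Real gains are usually smaller than this model suggests because other limits can dominate. In the AWS experiment, changing these settings did not yield significant differences.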

2. Client QPS and Burst Settings:

- ARGOCD_K8S_CLIENT_QPS and ARGOCD_K8S_CLIENT_BURST: These settings determine the rate at which the Argo CD application controller can make requests to the Kubernetes API. QPS (queries per second) limits the sustained request rate, while burst is the number of requests allowed in a short spike above that rate. Raising these values can help prevent request throttling in large deployments, at the cost of more load on the Kubernetes API server.
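The relationship between QPS and burst is easier to see with a token bucket, which is the general technique behind this kind of rate limit. The class below is a simplified model written for this post, not the Kubernetes client's implementation; the class name and the explicit `now` parameter are assumptions that keep the example deterministic.

```python
class TokenBucket:
    """Simplified token bucket: `qps` tokens are added per second, up to `burst`."""

    def __init__(self, qps: float, burst: int):
        self.qps = qps
        self.burst = burst
        self.tokens = float(burst)  # start full so an initial burst is allowed
        self.last = 0.0             # time of the last refill, in seconds

    def allow(self, now: float) -> bool:
        """Return True if a request arriving at time `now` may proceed."""
        elapsed = now - self.last
        self.tokens = min(self.burst, self.tokens + elapsed * self.qps)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


bucket = TokenBucket(qps=50, burst=100)

# 150 requests arriving at the same instant: only the burst size gets through.
allowed_at_once = sum(bucket.allow(now=0.0) for _ in range(150))
print(allowed_at_once)  # 100

# One second later the bucket has refilled by `qps` tokens.
allowed_later = sum(bucket.allow(now=1.0) for _ in range(150))
print(allowed_later)  # 50
```

With these numbers, a spike of up to 100 requests goes through immediately, but sustained traffic settles at 50 requests per second.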

Example:

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: argocd-application-controller
    spec:
      template:
        spec:
          containers:
          - name: application-controller
            env:
            # Sustained request rate to the Kubernetes API (env values must be strings)
            - name: ARGOCD_K8S_CLIENT_QPS
              value: "50"
            # Short-term burst allowance
            - name: ARGOCD_K8S_CLIENT_BURST
              value: "100"

3. Reconciliation Frequency

timeout.reconciliation

- Purpose: This setting controls how often the Argo CD application controller checks for differences between the desired state defined in the Git repository and the actual state of the applications deployed in Kubernetes. Essentially, it determines how often Argo CD refreshes each application.

- Functionality: The value is the interval between periodic refreshes of a given application. For example, if it is set to 180s, Argo CD refreshes each application roughly every 180 seconds, regardless of whether there were any changes in the Git repository.

- Impact: A longer interval reduces the load on the Kubernetes API and on Argo CD itself by preventing overly frequent reconciliation attempts, which is particularly useful in large deployments or in environments with resource constraints. The trade-off is that changes in Git take longer to be noticed.

- Default: 180s (3 minutes)

timeout.hard.reconciliation

- Purpose: This setting controls how often Argo CD performs a hard reconciliation (hard refresh) of an application. A hard refresh does not reuse the cached manifests; it regenerates them from the repository.

- Functionality: A normal refresh can reuse manifests that the repo server has already cached for the current Git revision. A hard refresh invalidates that cache, which helps when the rendered manifests can change without a new commit.

- Impact: Hard refreshes put more work on the repo server than normal refreshes, so in large deployments it is usually best to keep them disabled or to use a long interval.

- Default: 0 (hard reconciliation is disabled)

Example:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: argocd-cm
      namespace: argocd
    data:
      timeout.reconciliation: "60s"
      timeout.hard.reconciliation: "0s"
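A quick back-of-the-envelope calculation shows why this interval matters at scale. The sketch below assumes that refreshes are spread evenly over the interval, which is a simplification, and the function name is made up for this example.

```python
def refreshes_per_second(app_count: int, reconciliation_timeout_s: int) -> float:
    """Average refresh rate if every application is refreshed once per interval."""
    if reconciliation_timeout_s <= 0:
        # This model only covers periodic refreshes with a positive interval.
        raise ValueError("reconciliation_timeout_s must be positive")
    return app_count / reconciliation_timeout_s


for timeout in (60, 180, 360):
    rate = refreshes_per_second(10_000, timeout)
    print(f"timeout.reconciliation={timeout}s -> {rate:.1f} refreshes/s")

# timeout.reconciliation=60s -> 166.7 refreshes/s
# timeout.reconciliation=180s -> 55.6 refreshes/s
# timeout.reconciliation=360s -> 27.8 refreshes/s
```

Doubling the interval from 3 to 6 minutes halves the average number of refreshes the controller has to work through per second for the same 10,000 applications.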

4. Application Controller Sharding

- Sharding the Application Controller: This can be done either manually or automatically to distribute the load more evenly across multiple instances of the application controller. This is particularly useful in very large environments.

Example:

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: argocd-application-controller
    spec:
      # Number of controller pods; keep in sync with ARGOCD_CONTROLLER_REPLICAS
      replicas: 10
      template:
        spec:
          containers:
          - name: application-controller
            env:
            # Number of shards the application controller should assume
            - name: ARGOCD_CONTROLLER_REPLICAS
              value: "10"
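Conceptually, sharding means each controller replica is responsible for a subset of the managed clusters. The sketch below shows one simple way to do such an assignment: hash the cluster identifier and take it modulo the number of replicas. It uses CRC32 purely as a stand-in hash and is not Argo CD's own distribution algorithm; the function name and cluster names are assumptions for illustration.

```python
import zlib
from collections import Counter


def shard_for_cluster(cluster_id: str, replicas: int) -> int:
    """Map a cluster to a shard number in the range 0..replicas-1."""
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    # A stable hash guarantees the same cluster always lands on the same shard.
    return zlib.crc32(cluster_id.encode("utf-8")) % replicas


# 97 hypothetical clusters spread over 10 controller replicas.
clusters = [f"cluster-{i}" for i in range(97)]
assignment = Counter(shard_for_cluster(c, 10) for c in clusters)

for shard in sorted(assignment):
    print(f"shard {shard}: {assignment[shard]} clusters")
```

A hash-based split like this does not guarantee a perfectly even distribution, and it ignores how many applications each cluster hosts, which is one reason a controller may offer more than one way to distribute clusters across shards.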

5. Sync Window and Sync Policy:

- Sync Policy: Controls whether sync is automatic or manual, and whether it should prune or self-heal. Configuring sync strategies effectively can help in managing how changes are rolled out across the clusters.

Example:

    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: example-application
      namespace: argocd
    spec:
      syncPolicy:
        automated:
          prune: true
          selfHeal: true

- Sync Windows: Define specific time windows when synchronization can occur, which can be used to prevent sync during peak times or to align with maintenance windows.

Example:

    apiVersion: argoproj.io/v1alpha1
    kind: AppProject
    metadata:
      name: default
    spec:
      syncWindows:
      - kind: allow
        schedule: '10 1 * * *'
        duration: 1h
        applications:
        - '*-prod'
        manualSync: true
      - kind: deny
        schedule: '0 22 * * *'
        timeZone: "Europe/Amsterdam"
        duration: 1h
        namespaces:
        - default
      - kind: allow
        schedule: '0 23 * * *'
        duration: 1h
        clusters:
        - in-cluster
        - cluster1

The main fields are:

- kind: Specifies the type of window. "allow" permits syncs during the window, while "deny" blocks them.

- schedule: Defines when the window opens, using the standard cron format. In the example, '10 1 * * *' opens a window at 01:10 every day, '0 22 * * *' at 22:00 every day, and '0 23 * * *' at 23:00 every day.

- duration: Indicates how long the window stays open once it starts. In the example, every window stays open for 1 hour.
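To illustrate how a schedule and a duration combine into a window, here is a small Python sketch that checks whether a given moment falls inside a window that opens at the same time every day. It only covers schedules of the form "minute hour * * *", ignores time zones, and assumes durations of at most 24 hours, so it is a simplified model rather than a description of how Argo CD evaluates cron expressions. The function name is an assumption for this example.

```python
from datetime import datetime, timedelta


def in_daily_window(now: datetime, hour: int, minute: int, duration: timedelta) -> bool:
    """True if `now` falls inside a window that opens every day at hour:minute."""
    # Check today's window and yesterday's, since a window can span midnight.
    for days_back in (0, 1):
        start = now.replace(hour=hour, minute=minute, second=0, microsecond=0)
        start -= timedelta(days=days_back)
        if start <= now < start + duration:
            return True
    return False


one_hour = timedelta(hours=1)

# Window from the schedule '0 23 * * *' with a duration of 1h.
print(in_daily_window(datetime(2024, 1, 1, 23, 30), 23, 0, one_hour))  # True
print(in_daily_window(datetime(2024, 1, 2, 0, 30), 23, 0, one_hour))   # False

# The same start time with a 2h duration reaches past midnight.
print(in_daily_window(datetime(2024, 1, 2, 0, 30), 23, 0, timedelta(hours=2)))  # True
```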

My takes on the recommendations

Here are my takeaways from the article:

- Reconciliation Timeout: Increasing the reconciliation timeout from the default 3 minutes to 6 minutes proved effective in ensuring timely processing of applications, leading to better queue management.

- Client QPS Settings: Modifying the client QPS/burst QPS settings had a significant impact on reducing sync times, indicating faster processing of applications. Monitoring of the Kubernetes API server is recommended when adjusting these settings.

- Sharding: Sharding the application controller, either manually or automatically, showed substantial improvements in sync times and reconciliation queue clear out, enabling more efficient distribution of workload across multiple instances.

- Status/Operation Processor Settings: Although adjusting these settings did not yield significant differences in the experiments, it's still advised to examine them, as they may influence scalability in different scenarios.

Optimizing Argo CD for scalability on Amazon EKS requires a combination of adjusting configuration settings like reconciliation timeout, client QPS, and sharding the application controller. These recommendations aim to address the challenges of efficiently managing large-scale deployments while maintaining performance and reliability. Further experiments and optimizations are planned to enhance Argo CD's scalability even further.
