Comparing Contour, Emissary-ingress, and Ingress Nginx: Your Guide to Ingress Controllers

If you're considering an open-source ingress controller for your Kubernetes cluster, you're probably wondering which one is right for you. This post compares three popular ingress controllers - Contour, Emissary-ingress, and Ingress Nginx (SIG version) - to help you make an informed decision.

We will not do any performance testing or go into great detail about the features each of the three provides. Instead, we will look at the high-level capabilities and discuss the differences.

It is worth mentioning that all three projects have been donated to the CNCF. Personally, I've mostly worked with SIG ingress-nginx, and it is a very nice tool if you have previous experience with Nginx, since you will already be familiar with the key features.

What I find exciting is the CRD approach that Contour and Emissary-ingress take with Envoy, where we can control the implementation with custom resources instead of creating Ingress objects.

Overview of Ingress Controllers

An ingress controller is a component that manages external access to services within a Kubernetes cluster. Generally, it acts as a reverse proxy, directing incoming traffic to the correct service based on the URL path or domain name.

Envoy forms the backbone of both Contour and Emissary-ingress. This high-performance proxy is designed for cloud-native applications and offers features such as load balancing, circuit breaking, and traffic management. Using an ingress controller built atop Envoy means these features are readily available, saving you time and effort in configuration. You might recognize Envoy from service mesh projects like Istio and Consul.

Ingress-nginx Controller

With the ingress-nginx controller, you define routing rules using the Kubernetes Ingress resource. Each Ingress resource can include a set of rules for routing external HTTP/HTTPS traffic to services, based on the requested hostname and path.

For example, if you want to route traffic for devoriales.com to a service called "example-service" on port 8080, you would create an Ingress like this:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: devoriales-ingress
    spec:
      rules:
        - host: "devoriales.com"
          http:
            paths:
              - pathType: Prefix
                path: "/"
                backend:
                  service:
                    name: example-service
                    port:
                      number: 8080

To extend the functionality of the standard Ingress resource, ingress-nginx uses annotations and ConfigMaps. Annotations customize the behavior of a specific Ingress, while ConfigMaps apply global configuration changes across all Ingress resources.
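As a rough sketch of the difference, the first manifest below sets an option on a single Ingress through an annotation, and the second sets the same option controller-wide through a ConfigMap. The size values are arbitrary, and the ConfigMap name and namespace are assumptions that depend on how the controller was installed:

```yaml
# Per-Ingress setting: applies only to this Ingress resource.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: devoriales-ingress
  annotations:
    nginx.ingress.kubernetes.io/proxy-body-size: "16m"
spec:
  ingressClassName: nginx          # assumes the default IngressClass name
  rules:
    - host: "devoriales.com"
      http:
        paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: example-service
                port:
                  number: 8080
---
# Global setting: applies to every Ingress served by the controller.
apiVersion: v1
kind: ConfigMap
metadata:
  name: ingress-nginx-controller   # assumed name; check your installation
  namespace: ingress-nginx         # assumed namespace
data:
  proxy-body-size: "8m"
```

When both are present, the annotation on the Ingress takes precedence over the global value for that Ingress.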

Envoy-based Ingress Controllers

Envoy-based ingress controllers are compatible with the Gateway API framework. To clarify how its resources interact within Kubernetes, I've created a diagram illustrating the components and workflow of the Gateway API:

                       +---------+---------+
                       |   GatewayClass    |
                       |   (LoadBalancer   |
                       |   configuration)  |
                       +---------+---------+
                                 |
                                 v
                       +---------+---------+
                       |      Gateway      |
                       | (Actual instance) |
                       +---------+---------+
                                 |
            +--------------------+--------------------+
            |                    |                    |
            v                    v                    v
    +-------+--------+   +-------+--------+   +-------+--------+
    |   HTTPRoute    |   |    TCPRoute    |   |    TLSRoute    |
    | (HTTP routing) |   | (TCP routing)  |   | (TLS routing)  |
    +-------+--------+   +-------+--------+   +-------+--------+
            |                    |                    |
            v                    v                    v
    +-------+--------+   +-------+--------+   +-------+--------+
    |    Services    |   |    Services    |   |    Services    |
    | (Backend Pods) |   | (Backend Pods) |   | (Backend Pods) |
    +----------------+   +----------------+   +----------------+

- GatewayClass: Defines a type of gateway you can create, which dictates the underlying technology (like a specific LoadBalancer) used for traffic routing.

- Gateway: Represents an instance of a gateway as defined by a GatewayClass, acting as the entry point for traffic.

- Routes (HTTPRoute, TCPRoute, TLSRoute): Define how traffic through a Gateway should be routed to backend services. Each type targets specific protocols.

- Services (Backend Pods): The ultimate destination for traffic, typically a set of Pods running the application logic.
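To make the chain concrete, here is a minimal sketch of the three resource types wired together. It assumes the Gateway API v1 CRDs are installed in the cluster; the resource names and the `controllerName` value are hypothetical placeholders, and a real value would come from the controller you run:

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: GatewayClass
metadata:
  name: example-class
spec:
  controllerName: example.com/gateway-controller   # hypothetical controller
---
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: example-gateway
spec:
  gatewayClassName: example-class    # refers to the GatewayClass above
  listeners:
    - name: http
      protocol: HTTP
      port: 80
---
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: example-route
spec:
  parentRefs:
    - name: example-gateway          # attaches the route to the Gateway
  hostnames:
    - "devoriales.com"
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - name: example-service      # the backend Service
          port: 8080
```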

Contour, for instance, uses a custom resource called HTTPProxy to define routing rules. This HTTPProxy resource offers a more extensive feature set compared to the Ingress resource.

If you want to route traffic for devoriales.com to example-service on port 8080 using Contour, you would create an HTTPProxy like this:

    apiVersion: projectcontour.io/v1
    kind: HTTPProxy
    metadata:
      name: devoriales-proxy
      namespace: default
    spec:
      virtualhost:
        fqdn: devoriales.com
      routes:
        - conditions:
            - prefix: /
          services:
            - name: example-service
              port: 8080

Both Contour and Emissary-ingress utilize custom resource definitions (CRDs) to define routing rules and other configurations.

These CRDs enable more fine-grained control, allowing you to define complex routing behaviors, security policies, and more. They offer a Kubernetes-native way to specify these configurations, ensuring they integrate smoothly into the Kubernetes ecosystem.
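As one illustration of that finer control, the sketch below is a variation of the HTTPProxy shown earlier that routes on a request header, something a plain Ingress cannot express without controller-specific annotations. The header name `x-canary` and the service `example-service-canary` are hypothetical:

```yaml
apiVersion: projectcontour.io/v1
kind: HTTPProxy
metadata:
  name: devoriales-canary
  namespace: default
spec:
  virtualhost:
    fqdn: devoriales.com
  routes:
    # Requests carrying "x-canary: true" go to the canary service.
    - conditions:
        - prefix: /
        - header:
            name: x-canary
            exact: "true"
      services:
        - name: example-service-canary   # hypothetical service
          port: 8080
    # Everything else goes to the stable service.
    - conditions:
        - prefix: /
      services:
        - name: example-service
          port: 8080
```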

Conveniently, both also support standard Kubernetes Ingress objects, although with limitations, since not every configuration option can be expressed that way.

Comparison Table

| Ingress controller | Tech | HTTP/2 support | TCP/UDP support | Advanced LB algorithms | TLS termination | Rate limiting | Request/response modification | Customizable load balancing |
|---|---|---|---|---|---|---|---|---|
| Contour | Envoy | yes | no | yes | yes | yes (via third-party rate limiting service) | yes | yes |
| Emissary-ingress | Envoy | yes | yes | yes | yes | yes (via third-party rate limiting service) | yes | yes |
| Ingress Nginx (SIG version) | Nginx | yes | yes (via ConfigMaps) | yes | yes | yes | yes | yes |

Just to clarify the table:

Request/Response Modification: This refers to the capability of a controller to change the HTTP request or response as it passes through. These modifications can include adding, modifying, or removing headers, adjusting the URL path, or changing other properties of the HTTP request or response.
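A minimal sketch of such modifications, using Contour's HTTPProxy as the example controller. The `/api` prefix and the header names are arbitrary choices for illustration:

```yaml
apiVersion: projectcontour.io/v1
kind: HTTPProxy
metadata:
  name: header-rewrite-example
spec:
  virtualhost:
    fqdn: devoriales.com
  routes:
    - conditions:
        - prefix: /api
      # Replace the matched /api prefix with / before forwarding.
      pathRewritePolicy:
        replacePrefix:
          - replacement: /
      # Add a header to the request sent to the backend.
      requestHeadersPolicy:
        set:
          - name: X-Request-Source
            value: ingress
      # Remove a header from the response sent to the client.
      responseHeadersPolicy:
        remove:
          - X-Powered-By
      services:
        - name: example-service
          port: 8080
```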

TCP/UDP Support: TCP (Transmission Control Protocol) and UDP (User Datagram Protocol) are two widely used protocols for transmitting packets over the internet. They serve different purposes: TCP is used when reliability is crucial (such as loading a webpage), while UDP is used when speed matters (as in a video stream). For an ingress controller, TCP/UDP support means that the controller can direct traffic not only based on HTTP rules, such as path or host, but also based on the TCP/UDP port that the request is sent to. This feature comes in handy when you have non-HTTP services running in your Kubernetes cluster. For instance, let's say you have a database running internally that you want to expose. With TCP/UDP support enabled, you can configure your controller to route traffic arriving at a given IP and port directly to your database.
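With ingress-nginx, the database case could be sketched roughly as follows. This assumes the controller was started with `--tcp-services-configmap=ingress-nginx/tcp-services` and that its Service also exposes port 5432; the `postgres` Service in the `default` namespace is hypothetical:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: tcp-services
  namespace: ingress-nginx
data:
  # "<external port>": "<namespace>/<service name>:<service port>"
  "5432": "default/postgres:5432"
```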

Advanced Load Balancing (LB) Algorithms: Load balancing refers to the process of distributing network traffic across multiple servers to ensure that no single server is overwhelmed. This enhances the reliability and availability of applications. While basic load balancing distributes traffic evenly, advanced load balancing algorithms consider additional factors, such as:

- Least Connections: This method directs traffic to the server with the fewest active connections. It proves beneficial in situations where requests create varying loads on servers.

- IP Hash: With this method, clients with the same IP address are consistently directed to the same available server. It aids in maintaining user sessions.

- URL Hash: Using this method ensures that requests for the same URL are always directed to the same available server. It proves useful for caching purposes.
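How these algorithms are selected differs per controller. The sketch below shows two approximations: hashing on the client IP with an ingress-nginx annotation, and Envoy's least-request strategy (a close relative of least connections) in a Contour HTTPProxy. Resource names are placeholders, and the `nginx` IngressClass name is an assumption:

```yaml
# ingress-nginx: consistent hashing on the client IP (similar to IP hash).
# Using "$request_uri" instead would hash on the requested URL.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hash-by-client-ip
  annotations:
    nginx.ingress.kubernetes.io/upstream-hash-by: "$remote_addr"
spec:
  ingressClassName: nginx
  rules:
    - host: devoriales.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: example-service
                port:
                  number: 8080
---
# Contour: prefer the endpoint with the fewest active requests.
apiVersion: projectcontour.io/v1
kind: HTTPProxy
metadata:
  name: least-request
spec:
  virtualhost:
    fqdn: devoriales.com
  routes:
    - conditions:
        - prefix: /
      loadBalancerPolicy:
        strategy: WeightedLeastRequest
      services:
        - name: example-service
          port: 8080
```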

Rate Limiting: Rate limiting is a technique used to control network traffic by setting limits on how many requests a client can make within a given time frame. For example, you can configure your controller to allow up to 100 requests per minute from each IP address for a particular service.

If an IP address exceeds this threshold, any additional requests it makes within that minute will be rejected or delayed. This helps safeguard your services from becoming overwhelmed by excessive requests.
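With ingress-nginx, the 100-requests-per-minute example could be sketched with a single annotation. The Ingress name is a placeholder and the `nginx` IngressClass name is an assumption:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: rate-limited
  annotations:
    # Allow roughly 100 requests per minute from each client IP.
    nginx.ingress.kubernetes.io/limit-rpm: "100"
spec:
  ingressClassName: nginx
  rules:
    - host: devoriales.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: example-service
                port:
                  number: 8080
```

The controller also tolerates short bursts above the configured rate, so the effective cutoff is not an exact count of 100.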

Customizable Load Balancing: This means you can customize how the controller distributes traffic among your services. It could be as simple as adjusting the priority of services (such as sending more traffic to some than to others), or it could involve implementing your own load balancing algorithm. For instance, you might have one service that's faster but more expensive and another that is slower but cheaper. With customizable load balancing, you can configure your controller to direct 70% of traffic to the affordable service and 30% to the faster one.

Remember, not all of these features will be applicable in every scenario. It's important to consider which features your application needs when selecting a controller.
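The 70/30 split described above can be sketched with weighted services in a Contour HTTPProxy. The service names `cheap-service` and `fast-service` are hypothetical:

```yaml
apiVersion: projectcontour.io/v1
kind: HTTPProxy
metadata:
  name: weighted-split
spec:
  virtualhost:
    fqdn: devoriales.com
  routes:
    - conditions:
        - prefix: /
      services:
        # Weights are relative: 70 of every 100 requests go here.
        - name: cheap-service
          port: 8080
          weight: 70
        # The remaining 30 go to the faster service.
        - name: fast-service
          port: 8080
          weight: 30
```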

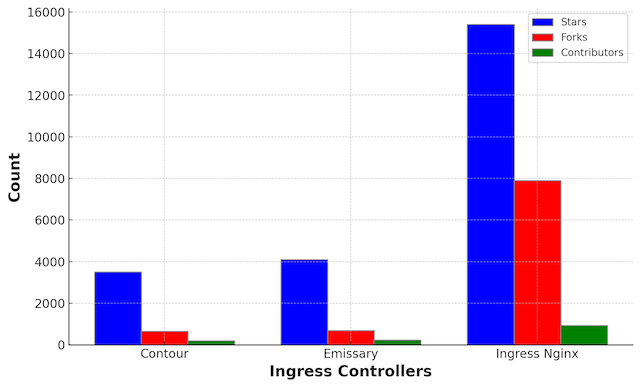

Usage numbers and popularity

Popularity can be measured in terms of the number of GitHub stars, forks, and contributors. The diagrams below present the figures at the time of writing this article:

Ingress Nginx (SIG version) leads in all three categories, indicating a larger user base and an active community of contributors.

Emissary and Contour also have a large number of stars, forks, and contributors, but fewer than ingress-nginx. Given that Nginx has been around for many years and is widely used, this is not surprising.

However, a large difference in popularity doesn't necessarily mean you should automatically choose the most popular option. Although I personally use ingress-nginx in my current role, I still find both Contour and Emissary intriguing, especially given their use of the CRD approach. This feels like a more modern way to customize ingress controllers and rules.

Contour Ingress Controller

Contour is an open-source ingress controller built on Envoy. What sets it apart is its user-friendliness and high scalability, making it an excellent choice for handling extensive Kubernetes deployments.

One of Contour's standout features is its support for HTTP/2. With this, you can expect more efficient performance and lower latency compared to the older HTTP/1.1. It also offers TLS termination, enabling you to encrypt traffic flowing between the client and the ingress controller.

To install Contour with Helm, first add the Bitnami Helm repository, which provides a Contour chart:

    # add repository
    helm repo add bitnami https://charts.bitnami.com/bitnami

    # update the repository
    helm repo update

    # installation
    helm install my-release bitnami/contour --namespace projectcontour --create-namespace

Contour can work with both the standard Kubernetes Ingress resource and its own custom resource, HTTPProxy. The main difference is that the HTTPProxy resource is specific to Contour and can unlock more advanced features.

Here's an example of how you might configure Contour to route traffic to a service. This configuration routes traffic coming to devoriales.com/foo to the foo service and traffic coming to devoriales.com/bar to the bar service:

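    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: example-ingress
    spec:
      rules:
        - host: devoriales.com
          http:
            paths:
              # networking.k8s.io/v1 requires pathType and the
              # backend.service form (serviceName/servicePort are v1beta1).
              - path: /foo
                pathType: Prefix
                backend:
                  service:
                    name: foo
                    port:
                      number: 80
              - path: /bar
                pathType: Prefix
                backend:
                  service:
                    name: bar
                    port:
                      number: 80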

You can also achieve the same by creating the HTTPProxy object:

    apiVersion: projectcontour.io/v1
    kind: HTTPProxy
    metadata:
      name: example-proxy
    spec:
      virtualhost:
        fqdn: devoriales.com
      routes:
        - conditions:
            - prefix: /foo
          services:
            - name: foo
              port: 80
        - conditions:
            - prefix: /bar
          services:
            - name: bar
              port: 80

This example accomplishes the same routing as the Ingress example, but it uses Contour's HTTPProxy resource instead of the standard Ingress resource. The conditions field under routes defines the path prefixes that should be routed to each service, similar to the paths in the Ingress resource.
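As a sketch of the TLS termination mentioned earlier, the same HTTPProxy can reference a certificate through the `tls` field of the virtual host. The Secret name `devoriales-tls` is hypothetical and is assumed to be an existing `kubernetes.io/tls` Secret in the same namespace:

```yaml
apiVersion: projectcontour.io/v1
kind: HTTPProxy
metadata:
  name: example-proxy-tls
spec:
  virtualhost:
    fqdn: devoriales.com
    tls:
      secretName: devoriales-tls   # hypothetical TLS Secret
  routes:
    - conditions:
        - prefix: /foo
      services:
        - name: foo
          port: 80
    - conditions:
        - prefix: /bar
      services:
        - name: bar
          port: 80
```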

Emissary-Ingress

Emissary-ingress, formerly known as Ambassador, was developed by Datawire. It's an open-source ingress controller designed to be highly customisable.

It should not be confused with Ambassador Edge Stack, a commercial paid product that has some built-in features such as rate limiting and authentication.

Read more about the feature comparisons here

Installing Emissary-ingress with Helm

    # add repo
    helm repo add datawire https://www.getambassador.io

    # update the repository
    helm repo update

    # install
    helm install emissary-ingress datawire/emissary-ingress

Here's an example of how you might configure Emissary-ingress to route traffic to a service. This configuration routes traffic coming to devoriales.com/foo to the foo service and traffic coming to devoriales.com/bar to the bar service:

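    # Emissary-ingress routes traffic with Mapping resources,
    # one per route.
    apiVersion: getambassador.io/v3alpha1
    kind: Mapping
    metadata:
      name: foo
    spec:
      hostname: devoriales.com
      prefix: /foo
      service: foo:80
    ---
    apiVersion: getambassador.io/v3alpha1
    kind: Mapping
    metadata:
      name: bar
    spec:
      hostname: devoriales.com
      prefix: /bar
      service: bar:80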
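Emissary-ingress 2.x and later also expect a Listener resource that tells the proxy which port and protocol to accept traffic on. A minimal sketch, assuming Emissary-ingress runs in a namespace called `emissary` and should serve plain HTTP on port 8080:

```yaml
apiVersion: getambassador.io/v3alpha1
kind: Listener
metadata:
  name: emissary-ingress-listener-8080
  namespace: emissary          # assumed installation namespace
spec:
  port: 8080
  protocol: HTTP
  securityModel: XFP           # trust X-Forwarded-Proto to decide if a request is secure
  hostBinding:
    namespace:
      from: ALL                # accept Host resources from all namespaces
```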

Ingress Nginx (SIG version)

ingress-nginx (SIG version) is a community-driven project under the Kubernetes SIG Network group, while the NGINX Ingress Controller for Kubernetes is the official version supported by NGINX Inc., a part of F5 Networks. The official version offers commercial support, integrates with other NGINX products, and may include features not available in the SIG version. However, both serve similar purposes and provide extensive configuration options. In my opinion, the SIG version integrates more nicely with Kubernetes, but that is not a topic for this post.

One key feature of Ingress Nginx is its support for several load balancing algorithms, such as round-robin, EWMA (exponentially weighted moving average), and consistent hashing on a request attribute. It also supports rate limiting and request/response modification.

Installing Ingress Nginx with Helm

    # add ingress-nginx repository
    helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

    # update the repository
    helm repo update

    # install
    helm install ingress-nginx ingress-nginx/ingress-nginx

Here's an example of how you might configure Ingress Nginx to route traffic to a service. This configuration routes traffic coming to devoriales.com/foo to the foo service and traffic coming to devoriales.com/bar to the bar service.
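A minimal sketch of such an Ingress, assuming the controller was installed with its default `nginx` IngressClass and that `foo` and `bar` are Services listening on port 80:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress
spec:
  ingressClassName: nginx    # assumed default class name
  rules:
    - host: devoriales.com
      http:
        paths:
          - path: /foo
            pathType: Prefix
            backend:
              service:
                name: foo
                port:
                  number: 80
          - path: /bar
            pathType: Prefix
            backend:
              service:
                name: bar
                port:
                  number: 80
```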

Gateway API

The Gateway API is an evolution of the Kubernetes Ingress and Service APIs, aiming to provide richer and more flexible network routing options. This includes enhanced support for multi-tenant scenarios, more expressive traffic routing rules, and expanded protocol support.

You can read more about Gateway API project here

Use Case: Improved Multi-Tenancy with Gateway API

Consider a scenario where a company has several teams, each deploying its applications in a shared Kubernetes cluster. Managing access and routing rules can become complicated using Ingress. To address this, the Gateway API offers improved separation and isolation between teams.

To illustrate, individual teams can define their own HTTPRoute resources to specify how their services should be exposed. These route resources are then linked to a Gateway, which is overseen by the cluster administrators.

    apiVersion: networking.x-k8s.io/v1alpha1
    kind: Gateway
    metadata:
      name: common-gateway
      namespace: infra
    spec:
      gatewayClassName: acme-lb
      listeners:
        - protocol: HTTP
          port: 80
          routes:
            kind: HTTPRoute
            selector:
              matchLabels:
                gateway: common-gateway
            namespaces:
              from: All
    ---
    apiVersion: networking.x-k8s.io/v1alpha1
    kind: HTTPRoute
    metadata:
      name: team-a-route
      namespace: team-a
      labels:
        gateway: common-gateway
    spec:
      rules:
        - matches:
            - path:
                type: Prefix
                value: /app1
          forwardTo:
            - serviceName: app1
              port: 8080

In this example, the common Gateway selects all HTTPRoute resources across all namespaces labeled with gateway: common-gateway. The HTTPRoute defined by team A routes traffic to its app1 service. This clear separation of roles and isolation provides a safer and more manageable multi-tenant environment.
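The manifests above use an early alpha version of the API. In the later `gateway.networking.k8s.io/v1` API, the attachment works the other way around: each route names its Gateway through `parentRefs`, and the Gateway decides which namespaces may attach through `allowedRoutes`. A sketch of the same scenario, assuming the v1 CRDs are installed and reusing the names from the example:

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: common-gateway
  namespace: infra
spec:
  gatewayClassName: acme-lb
  listeners:
    - name: http
      protocol: HTTP
      port: 80
      allowedRoutes:
        namespaces:
          from: All              # routes from any namespace may attach
---
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: team-a-route
  namespace: team-a
spec:
  parentRefs:
    - name: common-gateway       # cross-namespace reference to the Gateway
      namespace: infra
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /app1
      backendRefs:
        - name: app1
          port: 8080
```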

The Gateway API thus offers significant benefits in terms of safety, flexibility, and expressiveness.

The following page tracks downstream implementations and integrations of Gateway API for many tools including ingress controllers:

https://gateway-api.sigs.k8s.io/implementations/#nginx-kubernetes-gateway

Summary

Contour and Emissary-ingress, both built on Envoy, provide a rich feature set and the power of custom resources. On the other hand, Ingress Nginx (SIG version) might be a more comfortable choice for those already versed in Nginx configurations, and it offers broader community support due to its longer existence.

It's important to keep in mind that these options are not mutually exclusive. You can actually combine controllers to meet specific application needs, as long as you manage these combinations properly.

In the end, your decision on which ingress controller to choose should be based on your particular use case, your team's expertise, and the features that matter most to you. The future of ingress controllers looks promising, with ongoing developments in the Kubernetes ecosystem focused on enhancing flexibility, safety, and ease of use. So no matter which option you go for today, stay aware of the evolving landscape of Kubernetes ingress controllers.

About the Author

Aleksandro Matejic, a Cloud Architect, began working in the IT industry over 21 years ago as a technical specialist, right after his studies. Since then, he has worked in various companies and industries in various system engineer and IT architect roles. He currently works on designing Cloud solutions, Kubernetes, and other DevOps technologies.

In his spare time, Aleksandro works on different development projects such as developing devoriales.com, a blog and learning platform launching in 2022/2023. In addition, he likes to read and write technical articles about software development and DevOps methods and tools.

You can contact Aleksandro by visiting his LinkedIn Profile